Over the years I’ve looked at countless new packages, frameworks, and tools that come along on a seemingly endless basis. I love that software moves forward so quickly, but it seems to take an increasing amount of time to work out exactly what I’m looking at, and why I should be interested.

These days, all developer software tools and products seem to launch with websites that could easily be advertising consumer products, they’re that flashy. Even something somewhat niche like a monorepo-specific JS build system has a website that could be a sales page for Apple products.

While it looks nice and polished, I’ve developed an appreciation for something which gets straight to the point. It’s refreshing when it appears to value my time and makes it easy to understand what I’m looking at. Even more so when my default position is that I’m not interested, since I always aim to be boring.

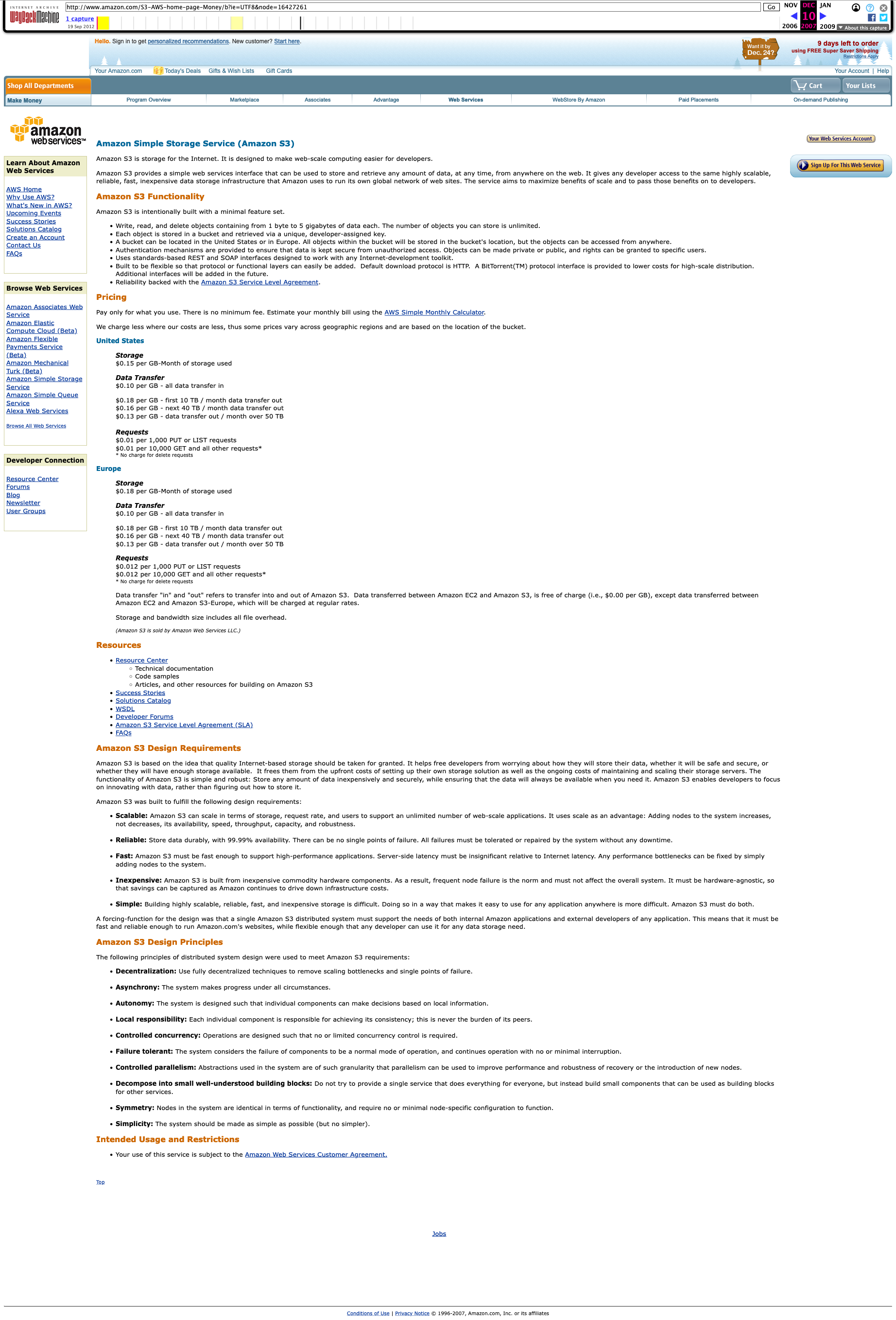

Of course in the ’early’ days, pages often looked boring because browsers were barely capable of anything else. But I’m going to give Amazon the benefit of the doubt for their launch page on S3, which was clearly written by engineers, for engineers. Thanks to the internet archive, we can see what S3’s marketing page looked like in 2007:

What’s notable is that while it looks dated, it communicates clearly exactly what it is and why you should use it, in a way that puts most modern site to shame.

Amazon S3 is storage for the Internet. It is designed to make web-scale computing easier for developers.

Amazon S3 provides a simple web services interface that can be used to store and retrieve any amount of data, at any time, from anywhere on the web.

Everything is presented on a single page. Nothing is hidden behind dark UI patterns. Pricing info is laid out in a heading that is second only to a functionality summary, and it’s followed by design requirements and principles that led to the creation of S3.

What’s also interesting is that these principles are just as applicable today (if not more so) than 15 years ago, and not just for a distributed-as-a-feature service like S3. These principles of distributed systems are certainly very well understood, but it’s nice to have a reminder as chances are they’re highly relevant to whatever I’m working on today.

- Decentralization: Use fully decentralized techniques to remove scaling bottlenecks and single points of failure.

- Asynchrony: The system makes progress under all circumstances.

- Autonomy: The system is designed such that individual components can make decisions based on local information.

- Local responsibility: Each individual component is responsible for achieving its consistency; this is never the burden of its peers.

- Controlled concurrency: Operations are designed such that no or limited concurrency control is required.

- Failure tolerant: The system considers the failure of components to be a normal mode of operation, and continues a operation with no or >minimal interruption.

- Controlled parallelism: Abstractions used in the system are of such granularity that parallelism can be used to improve performance and >robustness of recovery or the introduction of new nodes.

- Decompose into small well-understood building blocks: Do not try to provide a single service that does everything for everyone, but instead >build small components that can be used as building blocks

for other services.

- Symmetry: Nodes in the system are identical in terms of functionality, and require no or minimal node-specific configuration to function

- Simplicity: The system should be made as simple as possible (but no simpler).